In this article:

- Introduction

- Key Benefits of AI Inference in Edge Computing

- Use Cases of AI Inference at the Edge

- Conclusion

Introduction

As artificial intelligence (AI) continues to advance, its deployment is no longer confined to cloud computing. Instead, AI models are increasingly being embedded into edge devices such as smartphones, IoT sensors, and industrial hardware. This shift is bringing about transformative advantages across a wide range of industries. AI inference at the edge refers to the process of running pre-trained AI models directly on these local devices, enabling them to make decisions and process data without relying on remote cloud servers.

This movement towards combining AI with edge computing marks a significant shift in how data is processed and utilised. Positioning AI capabilities closer to the data source improves speed, privacy, and efficiency, and it opens up new potential for real-time decision-making, stronger security, and better operational efficiency.

In this article, we will explore the key benefits of AI inference at the edge and examine its wide range of applications across different industries.

Key Benefits of AI Inference in Edge Computing

- Real-Time Processing

- Privacy and Security

- Bandwidth Efficiency

- Stability and Scalability

- Energy Efficiency

- Hardware Accelerators

- Offline Operation

- Customisation and Personalisation

Real-Time Processing

One of the most compelling advantages of AI inference at the edge is its ability to enable real-time data processing. Traditional cloud computing often requires sending data back and forth between devices and centralised servers for analysis. This process can introduce latency due to network congestion or the physical distance between the data source and the server. In contrast, edge computing processes data locally, either on the device itself or near the data source, which significantly reduces response times.

This low-latency capability is crucial for applications that demand instantaneous action, such as autonomous vehicles, where real-time data analysis can mean the difference between safety and disaster. Similarly, industrial automation systems benefit from immediate fault detection and response, while healthcare monitoring systems can deliver critical real-time insights that can be life-saving.

Privacy and Security

One of the biggest challenges of cloud-based AI is the risk of data breaches or interception while transmitting sensitive information to remote servers. Edge computing mitigates this by keeping data processing local, thus minimising the need for extensive data transmission over potentially vulnerable networks. By handling data on edge devices, organisations can reduce the attack surface, making it more difficult for cybercriminals to access sensitive data.

This enhancement in privacy and security is especially valuable in sectors like finance, healthcare, and defence, where safeguarding sensitive information is a top priority. For instance, financial institutions can process transactional data locally, reducing the risk of exposing customer data to online threats. Similarly, healthcare systems can maintain the confidentiality of patient records while still benefiting from AI-driven diagnostics.

Bandwidth Efficiency

Edge computing also plays a significant role in improving bandwidth efficiency. By processing data locally, edge devices drastically reduce the amount of information that needs to be sent to cloud servers for further analysis. This has several key benefits:

- Reduced network congestion: Processing at the edge alleviates strain on the network, leading to smoother and faster connectivity for other critical applications.

- Lower bandwidth costs: With less data being transmitted over the internet or mobile networks, both organisations and end-users can enjoy substantial savings on data transfer costs.

- Optimised performance in remote areas: For environments with limited or expensive connectivity—such as rural areas or offshore locations—edge computing provides a practical solution by minimising the need for extensive data transfers.

In essence, edge computing makes optimal use of available network resources, enhancing the overall performance of the system.
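To make the bandwidth argument concrete, the following minimal sketch compares streaming every raw sample to the cloud with sending only the readings that a local check flags. The sample size, the threshold, and the function names are illustrative assumptions, and the simple threshold test stands in for a real on-device model.

```python
from typing import Iterable, List

SAMPLE_BYTES = 8  # assumed size of one encoded reading (hypothetical)


def flag_locally(readings: Iterable[float], threshold: float) -> List[float]:
    """Stand-in for on-device inference: keep only readings above a threshold."""
    return [r for r in readings if r > threshold]


def bytes_saved(readings: List[float], threshold: float) -> int:
    """Bytes that never leave the device when only flagged readings are sent."""
    raw_bytes = len(readings) * SAMPLE_BYTES
    edge_bytes = len(flag_locally(readings, threshold)) * SAMPLE_BYTES
    return raw_bytes - edge_bytes


# Made-up temperature readings; two of the eight exceed the threshold.
readings = [20.1, 20.3, 35.7, 20.2, 20.0, 41.2, 20.4, 20.1]
saved = bytes_saved(readings, threshold=30.0)
print(f"Saved {saved} of {len(readings) * SAMPLE_BYTES} bytes")
```

How much is saved in practice depends on how often the local model flags something, so the ratio here should not be read as typical.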

Stability and Scalability

Edge AI provides increased stability and scalability for large-scale AI deployments. Unlike cloud-based systems that rely heavily on central servers, AI inference at the edge allows organisations to deploy additional devices as needed without overburdening the core infrastructure.

This decentralised approach also improves system resilience. For instance, in the event of network disruptions or cloud server outages, edge devices can continue to function independently, ensuring uninterrupted services. This is particularly important for mission-critical applications, such as emergency response systems or industrial machinery control, where downtime could result in severe consequences.

Energy Efficiency

Many edge devices are designed with energy efficiency in mind, making them ideal for use in energy-constrained environments. By performing AI inference locally, edge devices significantly reduce the need for energy-intensive data transmission to distant cloud servers. This leads to overall energy savings and extends the battery life of portable devices, such as wearables, smartphones, and IoT sensors.

This efficiency is vital for applications in remote locations or where power availability is limited. Additionally, industrial settings benefit from reduced operational costs, as energy-efficient devices lower the total power consumption of large-scale AI implementations.

Hardware Accelerators

The use of AI accelerators, such as NPUs (Neural Processing Units), GPUs (Graphics Processing Units), TPUs (Tensor Processing Units), and custom ASICs (Application-Specific Integrated Circuits), is critical to enabling efficient AI inference at the edge. These specialised processors are optimised for the intensive computations required by AI models, allowing for high performance and low power consumption.

By integrating hardware accelerators into edge devices, it becomes possible to run complex AI models, such as deep neural networks, in real time with minimal latency. This makes it feasible to deploy sophisticated AI systems on resource-constrained devices, opening the door to powerful, low-latency applications.

Offline Operation

Edge AI systems offer offline functionality, an important asset in scenarios where continuous internet connectivity is unreliable or unavailable. In remote environments or critical applications—such as autonomous vehicles or security systems—edge devices can continue operating and making decisions locally without requiring constant communication with a cloud server.

This offline capability also helps protect data integrity. Edge AI systems can log data locally during network disruptions and synchronise with cloud servers once connectivity is restored. Provided local storage is sized for the length of the outage, this avoids data loss and improves the reliability of mission-critical applications.
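The log-locally-then-synchronise pattern can be sketched as a small store-and-forward buffer. This is one possible shape under stated assumptions, not a reference implementation: the class name `StoreAndForwardLog`, the `upload` callback, and the `fake_upload` stand-in for a cloud client are all hypothetical.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class StoreAndForwardLog:
    """Buffers inference results locally and uploads them when a link is available."""

    def __init__(self, upload: Callable[[Dict], bool], max_records: int = 1000) -> None:
        self._upload = upload  # returns True on success, False if the link is down
        # Bounded buffer: once it is full, appending drops the oldest record.
        self._pending: Deque[Dict] = deque(maxlen=max_records)

    def record(self, result: Dict) -> None:
        """Store one result locally, regardless of connectivity."""
        self._pending.append(result)

    def sync(self) -> int:
        """Upload pending records in order and stop at the first failure."""
        sent = 0
        while self._pending:
            if not self._upload(self._pending[0]):
                break
            self._pending.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._pending)


# Simulated link state and cloud endpoint (both hypothetical).
link = {"up": False}
received: List[Dict] = []


def fake_upload(record: Dict) -> bool:
    if link["up"]:
        received.append(record)
    return link["up"]


log = StoreAndForwardLog(fake_upload)
log.record({"seq": 1, "label": "ok"})
log.record({"seq": 2, "label": "fault"})
log.sync()            # link is down, so both records stay on the device
link["up"] = True
log.sync()            # link restored, records are uploaded in order
```

A record is removed from the buffer only after its upload succeeds, which keeps the ordering intact across retries. Because the buffer is bounded, its capacity has to be chosen with the longest expected outage in mind.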

Customisation and Personalisation

The localised processing of data at the edge allows for a high degree of customisation and personalisation. AI systems can be tailored to the specific needs of individual users or operational environments, enabling real-time adjustments to behaviour or preferences. This capability is particularly useful in sectors like retail, where personalised marketing can be driven by AI insights from real-time customer data, or in smart homes, where systems can adapt to the preferences of different occupants.

By enabling custom models that operate without continuous communication with the cloud, edge AI ensures faster, more relevant responses and a more user-centric experience.

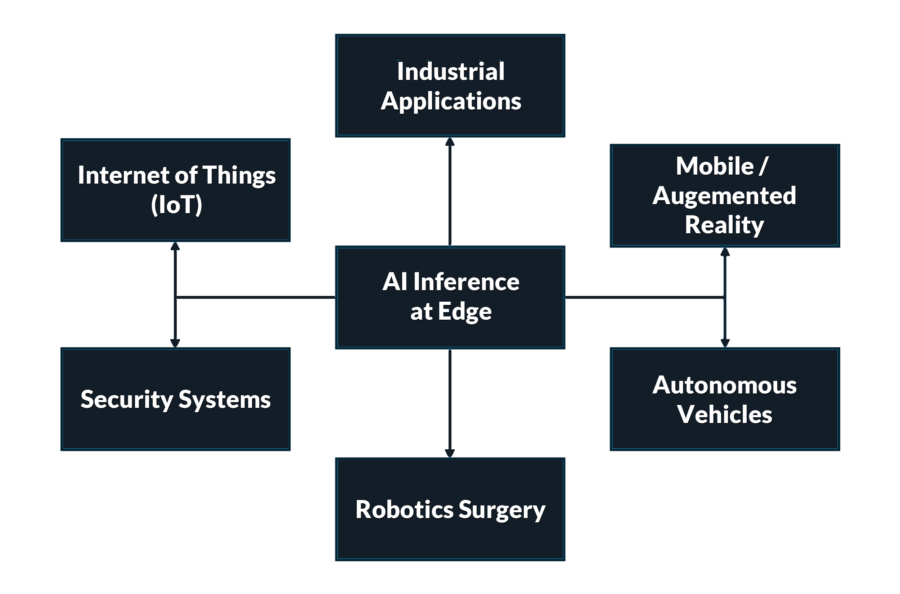

Use Cases of AI Inference at the Edge

Internet of Things (IoT)

The growth of the Internet of Things (IoT) is driven by smart sensors, which act as primary data collectors. However, centralised data processing can lead to delays and privacy concerns. Edge AI inference solves these issues by embedding intelligence directly into the sensors, enabling real-time analysis and decision-making at the source. This approach reduces latency and the need to transmit large volumes of data to central servers. As a result, smart sensors evolve from data collectors into real-time analysts, playing a crucial role in the development of IoT technologies.

Industrial Applications

In industries like manufacturing, predictive maintenance is essential for identifying potential equipment failures before they occur. Traditionally, health signals from machinery are sent to central cloud systems for AI analysis. However, the trend is shifting towards AI inference at the edge, where data is processed locally, improving system efficiency and performance while reducing costs. This allows manufacturers to obtain timely insights with minimal latency.
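As a rough illustration of processing machine health signals on the device, the sketch below flags readings that deviate sharply from a rolling window of recent values. The rolling z-score is a deliberately simple stand-in for a trained model, and the class name, window size, threshold, and sample values are all assumptions made for the example.

```python
from collections import deque
from statistics import mean, stdev
from typing import Deque, List


class VibrationMonitor:
    """Flags readings that deviate strongly from a rolling window of recent values."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0) -> None:
        self._history: Deque[float] = deque(maxlen=window)
        self._z_threshold = z_threshold

    def update(self, value: float) -> bool:
        """Return True if `value` looks anomalous relative to recent history."""
        is_anomaly = False
        if len(self._history) >= 5:  # wait for a few samples before judging
            mu = mean(self._history)
            sigma = stdev(self._history)
            if sigma > 0 and abs(value - mu) / sigma > self._z_threshold:
                is_anomaly = True
        if not is_anomaly:
            # Only normal readings update the baseline.
            self._history.append(value)
        return is_anomaly


# Made-up vibration amplitudes: eight normal readings, then a spike.
samples: List[float] = [1.0, 1.1, 0.9, 1.05, 0.95, 1.0, 1.1, 0.9, 5.0]
monitor = VibrationMonitor()
flags = [monitor.update(s) for s in samples]
print(flags)
```

Only the flagged events would need to be reported upstream, which is where the latency and cost benefits described above come from.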

Mobile and Augmented Reality (AR)

Mobile and augmented reality (AR) applications generate large amounts of data from sources like cameras, LiDAR, and audio inputs. For a seamless AR experience, this data must be processed within a latency window of 15-20 milliseconds. AI models, combined with specialised processors and advanced communication technologies, enable real-time analysis and autonomy at the edge.

Edge AI is crucial in meeting these latency requirements. By processing data locally on the device, AI models avoid a network round trip and can deliver the real-time analysis that smooth AR experiences depend on. Local processing also improves energy efficiency, making edge AI an important technology for next-generation mobile and AR applications.
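The latency window can be treated as a budget that the whole processing pipeline has to fit inside. The sketch below adds up per-stage times for an on-device path and a cloud path; every stage name and timing is an illustrative assumption rather than a measurement.

```python
from typing import Dict

BUDGET_MS = 20.0  # upper end of the 15-20 ms window mentioned in the text


def total_latency_ms(stages: Dict[str, float]) -> float:
    """Sum the time spent in each pipeline stage."""
    return sum(stages.values())


def fits_budget(stages: Dict[str, float], budget_ms: float = BUDGET_MS) -> bool:
    """True if the whole pipeline completes within the latency budget."""
    return total_latency_ms(stages) <= budget_ms


# Illustrative numbers only, not measurements.
on_device = {"capture": 4.0, "inference": 8.0, "render": 5.0}
via_cloud = {
    "capture": 4.0,
    "uplink": 15.0,
    "inference": 3.0,
    "downlink": 15.0,
    "render": 5.0,
}

for name, stages in (("on-device", on_device), ("via cloud", via_cloud)):
    print(name, total_latency_ms(stages), "ms, fits:", fits_budget(stages))
```

In this made-up example the cloud path misses the budget even though its inference stage is faster, because the network round trip dominates the total.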

Security Systems

In modern security systems, the integration of AI-powered edge analytics with video cameras is changing how threats are detected and addressed. Traditionally, video footage from multiple cameras is transmitted to central cloud servers for AI analysis. However, this method can introduce delays due to network congestion and the time it takes to process data in a centralised location. Such delays matter most in environments where immediate responses are necessary, such as airports, border security, and government facilities.

With AI inference at the edge, video analytics can be performed directly within the camera or close to the data source. This capability allows for real-time threat detection, enabling systems to instantly assess potential dangers, such as unauthorised access or suspicious activities. In the case of an urgent threat, the system can instantly notify security personnel or authorities, drastically improving response times.

This localised approach not only enhances security but also ensures that sensitive video data does not need to be transmitted over the internet, thus reducing the risks of cybersecurity breaches. Moreover, the reduced reliance on cloud servers means that edge AI-powered security cameras can operate effectively even in remote locations with limited internet access, ensuring uninterrupted security monitoring.

Robotic Surgery

In the field of robotic surgery, AI inference at the edge is playing a pivotal role in advancing the capabilities of remote surgical procedures. Remote robotic surgery allows surgeons to perform highly complex and precise operations from a distant location, using AI-powered robotic systems to execute their commands. These robotic systems rely on real-time communication and ultra-low latency to ensure that every movement is accurate and performed at the right moment.

Edge AI is critical in this context as it enables the surgical robots to process data locally, ensuring that they can make instantaneous decisions and adjustments during the procedure. This is especially important in life-or-death situations, where even the slightest delay could have serious consequences. By performing AI inference locally, the robotic systems can continuously monitor the patient’s vital signs, adapt to changes, and communicate with the surgeon in real time, all while ensuring that the operation is conducted safely and efficiently.

In addition to real-time responsiveness, edge AI in robotic surgery supports greater reliability and fail-safe operation. In the event of a network outage or other technical difficulties, the system can keep processing and storing essential data locally so that the procedure is not interrupted.

Autonomous Vehicles

The development of autonomous vehicles represents a monumental leap in technology, with AI inference at the edge serving as a cornerstone of this advancement. Autonomous vehicles, such as self-driving cars, rely on AI to process vast amounts of data from multiple sensors, including cameras, LiDAR, radar, and ultrasonic sensors. These sensors continuously collect real-time data on the vehicle’s surroundings, such as road conditions, nearby obstacles, and other vehicles.

AI accelerators embedded within the vehicle enable rapid real-time decision-making by processing this data locally on the edge, without the need to transmit it to remote servers for analysis. This immediate processing is crucial for allowing the vehicle to respond to changing road conditions, such as sudden obstacles or unpredictable driver behaviour, with minimal latency.

By integrating AI at the edge, autonomous vehicles can make split-second decisions that enhance safety and efficiency. For instance, they can automatically brake to avoid a collision or adjust their speed when entering a curve. This localised processing reduces the vehicle’s reliance on external networks, so it can continue to function even in areas with poor connectivity, such as rural roads or tunnels. It also allows self-driving cars to operate in real time in high-stakes environments, such as busy city streets or highways, promoting safer transportation systems overall.

Conclusion

The integration of AI inference in edge computing is transforming industries by enabling real-time decision-making, enhancing security, and optimising bandwidth, scalability, and energy efficiency. By bringing AI capabilities closer to the source of data generation, edge computing is revolutionising sectors such as security, healthcare, and autonomous driving, offering benefits such as reduced latency, improved reliability, and enhanced privacy.

In security systems, edge AI allows for immediate threat detection and quicker responses, significantly improving the security of critical locations. In robotic surgery, edge AI provides the real-time responsiveness needed to perform complex procedures with precision, even from remote locations. Meanwhile, autonomous vehicles benefit from edge AI’s ability to process data on the spot, enabling rapid decision-making and ensuring safe navigation in dynamic environments.

As AI continues to evolve, its applications in edge computing will expand, fostering innovation and increasing efficiency across a wide range of industries. However, despite the numerous advantages, challenges remain. Ensuring the accuracy and performance of AI models deployed at the edge is an ongoing area of research and development. Factors such as resource constraints, model optimisation, and reliable deployment must be addressed to realise the full potential of edge AI.

As these challenges are overcome, edge AI will continue to drive significant improvements across various domains, shaping the future of intelligent, decentralised computing and setting a new standard for real-time analytics. The future of AI lies in its ability to process data where it is generated, delivering faster, safer, and more efficient outcomes across industries.